Post Time:2025-04-29

Learn how to scrape user accounts on Instagram and TikTok ethically and responsibly using Python, APIs, and AWS cloud solutions for scalability and efficiency.

Scraping user accounts on platforms like Instagram or TikTok can be extremely valuable for market research, influencer analysis, and content strategy, because it supports data-driven decisions. However, it must be done responsibly and in compliance with legal and ethical standards to avoid violating platform policies.

In this guide, we’ll cover:

Scraping is the process of automatically extracting data from a website. Although it is a powerful way to collect information, it must be handled with care, especially on social platforms.

Instagram and TikTok have clear terms of service on how their data should be handled. Violating these terms can lead to account bans or legal action.

This means scraping must always be done ethically:

1. Adhere to the Terms of Service

Always work within the platforms' guidelines.

2. Use Publicly Available Data Only

Collect only publicly accessible pages that do not require special permissions.
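One common way to check what a site allows automated clients to fetch is its robots.txt file. The sketch below is illustrative only: the robots.txt content, the `research-bot` user agent, and the example.com URLs are assumptions made up for this example, and a robots.txt check does not replace reading the platform's terms of service.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /accounts/
Allow: /public/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, url: str, user_agent: str = "research-bot") -> bool:
    """Return True if the parsed rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(EXAMPLE_ROBOTS_TXT)
print(is_allowed(parser, "https://example.com/public/profile"))
print(is_allowed(parser, "https://example.com/accounts/settings"))
```

In a real run, `RobotFileParser.set_url()` followed by `read()` would load the live file instead of the inlined text.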

When conducted responsibly, scraping is beneficial for both businesses and researchers. It enables data collection without infringing on user privacy. Always ensure that your scraping activities do not compromise user data security.

Examples of ethical use cases include:

To scrape effectively and ethically, you need the right tools. Below are the most commonly used technologies for scraping and scaling operations.

Python is the go-to programming language for web scraping due to its versatility and rich ecosystem of libraries, such as Beautiful Soup, Selenium, and Scrapy.

Both Instagram and TikTok offer legitimate access APIs for approved use cases:

Limitations of APIs: While APIs are a reliable way to collect data, they are often constrained by rate limits and offer more restricted access than web scraping.
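When an API enforces rate limits, a client typically needs to slow down and retry rather than fail outright. The following is a minimal sketch of exponential backoff, not any platform's official client: `RateLimitError`, `call_with_backoff`, and `flaky_request` are hypothetical names, and it assumes your own request function raises `RateLimitError` when the API reports a limit (for example, an HTTP 429 response).

```python
import time


class RateLimitError(Exception):
    """Raised by the caller's request function when the API reports a rate limit."""


def call_with_backoff(request_fn, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call request_fn, waiting exponentially longer after each rate-limit error."""
    for attempt in range(max_attempts):
        try:
            return request_fn()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts, surface the error to the caller
            sleep(base_delay * (2 ** attempt))  # base_delay, then 2x, 4x, ...


attempts = {"count": 0}


def flaky_request():
    """Hypothetical request that is rate limited twice before succeeding."""
    attempts["count"] += 1
    if attempts["count"] <= 2:
        raise RateLimitError("too many requests")
    return "ok"


waits = []  # record delays instead of sleeping, so the demo finishes instantly
result = call_with_backoff(flaky_request, sleep=waits.append)
print(result, waits)
```

Passing `sleep` as a parameter keeps the function easy to test; in production the default `time.sleep` applies.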

AWS (Amazon Web Services) offers the infrastructure needed for large-scale, efficient scraping, including EC2, Lambda, S3, and CloudWatch.

By leveraging AWS, you can manage large-scale scraping operations efficiently.

By following the steps below, you can scrape effectively while upholding ethical standards. Always stay up to date on platform policies and legal guidelines to ensure compliance.

Setting up a Python environment with either Anaconda or virtualenv is straightforward. Isolating each project lets you manage dependencies effectively and avoid conflicts.

Anaconda is a popular distribution of Python that simplifies package management and deployment. It comes with many useful libraries pre-installed.

1. Install Anaconda

Go to the Anaconda website, download the appropriate installer for your operating system (Windows, macOS, or Linux), and follow the installation instructions.

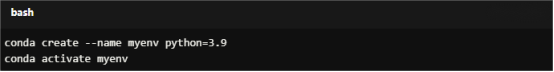

2. Create and Activate a New Environment

On Windows, search for "Anaconda Prompt" in the Start menu. On macOS or Linux, open your terminal.

Run the following commands to create and activate a new environment:

conda create --name myenv python=3.9

conda activate myenv

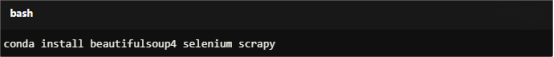

3. Install Required Packages

Once the environment is activated, you can install any necessary packages.

conda install beautifulsoup4 selenium scrapy

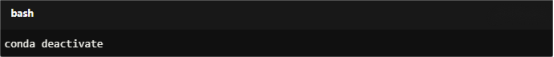

4. Deactivate the Environment

When you’re done working in the environment, you can deactivate it by running:

conda deactivate

virtualenv is a tool to create isolated Python environments. This method requires you to have Python installed on your system.

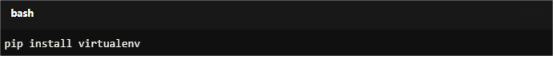

1. Install virtualenv

Open a terminal or Command Prompt and install virtualenv using pip:

pip install virtualenv

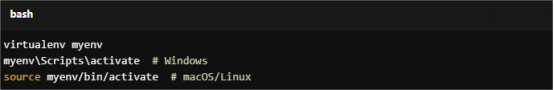

2. Create and Activate a New Environment

virtualenv myenv

myenv\Scripts\activate # Windows

source myenv/bin/activate # macOS/Linux

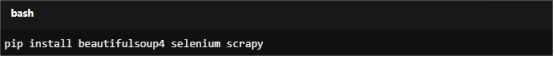

3. Install Required Packages

With the environment activated, install the necessary packages using pip:

pip install beautifulsoup4 selenium scrapy

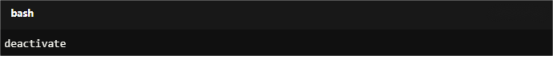

4. Deactivate the Environment

When you’re finished, deactivate the environment by running:

deactivate
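While the environment is still active, a quick check like the one below can confirm that the installed packages are importable. This is a sketch rather than part of either tool: `REQUIRED_MODULES` and `missing_packages` are illustrative names, and the mapping reflects the fact that a package's install name can differ from its import name.

```python
from importlib.util import find_spec

# Install name -> import name. beautifulsoup4 is imported as "bs4".
REQUIRED_MODULES = {"beautifulsoup4": "bs4", "selenium": "selenium", "scrapy": "scrapy"}


def missing_packages(required=REQUIRED_MODULES):
    """Return the package names whose import module cannot be found."""
    return [pkg for pkg, module in required.items() if find_spec(module) is None]


missing = missing_packages()
print("All packages found" if not missing else f"Missing: {', '.join(missing)}")
```

Running the script inside and outside the environment should give different results if the packages were installed only in the environment.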

Focus on publicly available user data, such as usernames, bios, follower counts, and engagement metrics.

Avoid Scraping Private or Sensitive Data: Ensure that you only scrape publicly available data to comply with platform terms of service. Respect user privacy and ethical guidelines.

Why Use Proxies?

Scraping can lead to IP bans due to excessive requests from a single IP address. Proxies can help mitigate this risk.

Types of Proxies

1. Residential Proxies: Less likely to be flagged because they appear as regular user traffic. Ideal for scraping social media platforms where detection is common.

2. Rotating Proxies: Change your IP address frequently to distribute requests and avoid detection. Highly effective for large-scale scraping operations that require anonymity.

Deploying web scraping scripts on AWS provides you with the flexibility to scale your operations effectively.

1. Create and Set Up Your AWS Account

Visit the AWS website and sign up for an account. Then log into the AWS Management Console.

2. Launch an EC2 Instance

a. Navigate to EC2 and click on "Launch Instance".

b. Choose an Amazon Machine Image (AMI): Select a suitable AMI, such as Ubuntu Server or Amazon Linux, commonly used for scraping tasks.

c. Configure Instance Details: Adjust necessary settings, such as the network configurations.

d. Launch the Instance and download the key pair for SSH access.

3. Connect to Your EC2 Instance

Open a terminal or Command Prompt and use SSH to connect. The default user is ec2-user on Amazon Linux and ubuntu on Ubuntu AMIs:

ssh -i /path/to/your-key.pem ec2-user@your-public-ip

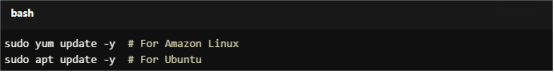

4. Set Up Your Python Environment

a. Update the Package Index

sudo yum update -y # For Amazon Linux

sudo apt update -y # For Ubuntu

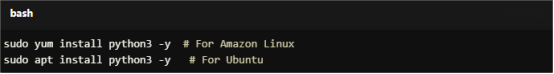

b. Install Python and Pip

sudo yum install python3 python3-pip -y # For Amazon Linux

sudo apt install python3 python3-pip -y # For Ubuntu

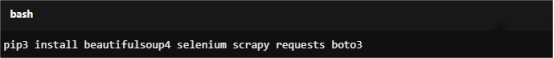

c. Install Required Libraries

pip3 install beautifulsoup4 selenium scrapy requests boto3

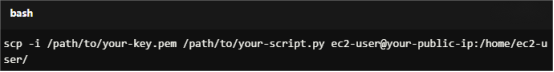

5. Deploy Your Scraping Script

a. Upload Your Script

scp -i /path/to/your-key.pem /path/to/your-script.py ec2-user@your-public-ip:/home/ec2-user/

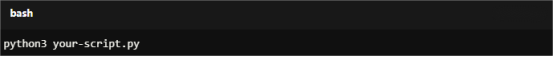

b. Run Your Script

python3 your-script.py

6. Store Scraped Data in S3

a. Create an S3 Bucket

Navigate to S3 in the AWS Management Console and create a bucket.

b. Upload Data to S3

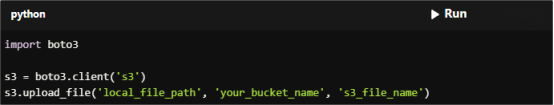

Modify your scraping script to upload data to S3 using the boto3 library.

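import boto3

# Create an S3 client; credentials come from the instance role or local AWS configuration
s3 = boto3.client('s3')

# Arguments: local file path, bucket name, object key (name) inside the bucket
s3.upload_file('local_file_path', 'your_bucket_name', 's3_file_name')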
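If the scraped data is held in memory rather than in a local file, it can be written to S3 directly. The sketch below assumes the records are a list of dictionaries and stores them as JSON Lines; `upload_records` is a hypothetical helper, and the bucket and key names in the usage comment are placeholders. It uses the boto3 S3 client's `put_object` call.

```python
import json


def upload_records(records, bucket, key, client=None):
    """Serialize records as JSON Lines and store them as a single S3 object."""
    if client is None:
        import boto3  # imported lazily so the function can be tested without AWS
        client = boto3.client("s3")
    body = "\n".join(json.dumps(record, sort_keys=True) for record in records)
    client.put_object(Bucket=bucket, Key=key, Body=body.encode("utf-8"))
    return len(records)


# Example call (requires AWS credentials and an existing bucket):
# upload_records([{"username": "example_user"}], "your_bucket_name", "profiles.jsonl")
```

Accepting an optional `client` makes it possible to pass a stub in tests instead of calling AWS.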

1. Create a Lambda function.

2. Upload your scraping code or a zip file.

3. Set up a CloudWatch Events (Amazon EventBridge) rule to trigger the function on a schedule.
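The code uploaded in step 2 needs a handler function that Lambda calls on each invocation. A minimal sketch might look like the following; `collect_public_profiles` is a hypothetical placeholder for your own scraping logic, and the returned dictionary shape is just one reasonable choice, not a requirement for scheduled invocations.

```python
import json


def collect_public_profiles():
    """Hypothetical placeholder for the scraping logic; returns a list of dicts."""
    return [{"username": "example_user", "followers": 1200}]


def lambda_handler(event, context):
    """Entry point that Lambda invokes; the scheduled rule's event arrives in `event`."""
    profiles = collect_public_profiles()
    return {
        "statusCode": 200,
        "body": json.dumps({"profiles_collected": len(profiles)}),
    }
```

The function can also be called locally, for example `lambda_handler({}, None)`, to check the logic before deploying.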

Organize Scraped Data

Use tools like Pandas in Python to clean and organize your data for analysis.
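As a rough illustration of that cleanup step, the sketch below normalizes usernames, converts follower counts to numbers, and removes duplicate accounts. The column names and sample rows are assumptions invented for the example; a real run would load the scraper's own output.

```python
import pandas as pd

# Hypothetical sample rows standing in for scraped output.
raw = pd.DataFrame(
    {
        "username": [" Alice ", "bob", "bob", None],
        "followers": ["1200", "350", "350", "90"],
    }
)

clean = (
    raw.dropna(subset=["username"])  # drop rows that have no username
    .assign(
        username=lambda df: df["username"].str.strip().str.lower(),
        followers=lambda df: pd.to_numeric(df["followers"], errors="coerce"),
    )
    .drop_duplicates(subset=["username"])  # keep one row per account
    .reset_index(drop=True)
)
print(clean)
```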

Responsible Use of Data

Ensure that the data collected is used ethically. Remove any personally identifiable information (PII), whether the data is used for research or commercial purposes.
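One possible way to strip PII before analysis is to drop sensitive fields and replace usernames with a keyed hash, so records can still be grouped per account without exposing the handle. This is a sketch under assumptions: the field names in `PII_FIELDS` and the `anonymize` helper are hypothetical, and keyed hashing is pseudonymization, which may not meet every legal definition of anonymization.

```python
import hashlib
import hmac

# Fields treated as PII in this sketch; adjust to the data actually collected.
PII_FIELDS = {"email", "phone", "full_name"}


def anonymize(record, secret_key):
    """Drop PII fields and replace the username with a keyed SHA-256 hash."""
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in PII_FIELDS and key != "username"
    }
    digest = hmac.new(secret_key, record["username"].encode("utf-8"), hashlib.sha256)
    cleaned["user_id"] = digest.hexdigest()[:16]  # shortened for readability
    return cleaned


sample = {"username": "example_user", "email": "user@example.com", "followers": 1200}
print(anonymize(sample, secret_key=b"replace-with-a-private-key"))
```

Keep the secret key private and out of the dataset; anyone holding it can recompute the mapping for known usernames.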

1. Legal and Ethical Risks

Violating platform terms of service could lead to account bans or legal action.

2. Technical Challenges

Dynamic content on TikTok and Instagram (e.g., videos) can complicate scraping. Use a browser automation tool like Selenium, which can drive a headless browser, to handle these challenges, and consider using tools to solve CAPTCHAs.

3. Rate Limits and IP Blocks

Both platforms may block IPs after detecting scraping activity. Using rotating proxies and AWS can help.

1. Scrape Public Data Only

Never attempt to bypass security measures to access private accounts.

2. Respect Platform Policies

Regularly review the latest terms of service on Instagram and TikTok to avoid violations.

3. Implement Rate Limiting

Avoid sending too many requests in a short period to prevent detection.

4. Anonymize Data

Remove personally identifiable information (PII) to protect user privacy.

5. Use APIs Where Possible

Prefer official APIs for accessing data legally, even with rate limitations.
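The rate-limiting practice above can be sketched as a small throttle that enforces a minimum gap between requests. `Throttle` is a hypothetical helper written for this example, and the 0.1-second interval is only a demo value; choose an interval that respects the platform's documented limits.

```python
import time


class Throttle:
    """Enforce a minimum interval between consecutive calls to wait()."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous call, None before the first

    def wait(self):
        """Block until at least min_interval seconds have passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


throttle = Throttle(min_interval=0.1)
for page in range(3):
    throttle.wait()  # call this before each request
    print(f"requesting page {page}")
```

The clock and sleep functions are injectable so the behavior can be verified without real delays.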

Scraping user accounts on Instagram and TikTok can provide valuable insights for research or business, but it must always be done ethically and in compliance with platform policies. With tools like Python, proxies, and AWS, you can build scalable and efficient scraping operations while staying within ethical boundaries. Ready to start your ethical scraping journey? Explore our rotating residential proxies today and unlock the potential of data collection. Register to request a trial.

1. Is it legal to scrape user accounts on Instagram and TikTok?

Scraping public data is generally legal, but accessing private data or violating platform terms of service can lead to legal consequences.

2. How does AWS work for scalable scraping?

a. AWS Services for Scraping

EC2 Instances: Run custom scraping scripts with flexible computing power that can scale based on demand.

Lambda Functions: Execute on-demand scraping tasks without managing servers.

CloudWatch: Monitor and log scraping activity to ensure smooth operations.

b. Using AWS to Handle Large-Scale Scraping

AWS allows you to manage massive scraping operations effectively:

Handle multiple requests simultaneously without downtime.

Use load balancers to distribute scraping workloads efficiently.

Cost Optimization Tips for Scraping on AWS:

Use spot instances or auto-scaling groups.

Store data in S3 buckets and analyze it using AWS Glue or Athena.

3. Why use AWS for scraping?

AWS provides scalable and reliable infrastructure (e.g., EC2, Lambda) for managing large-scale scraping operations.

4. What data can I scrape from Instagram and TikTok responsibly?

Publicly available data like usernames, bios, follower counts, and engagement metrics.
